How Music Apps Communicate

Almost any software, whatever language it is written in and whatever computer it runs on, is built on compiled 32- or 64-bit libraries, SDKs, frameworks, etc.

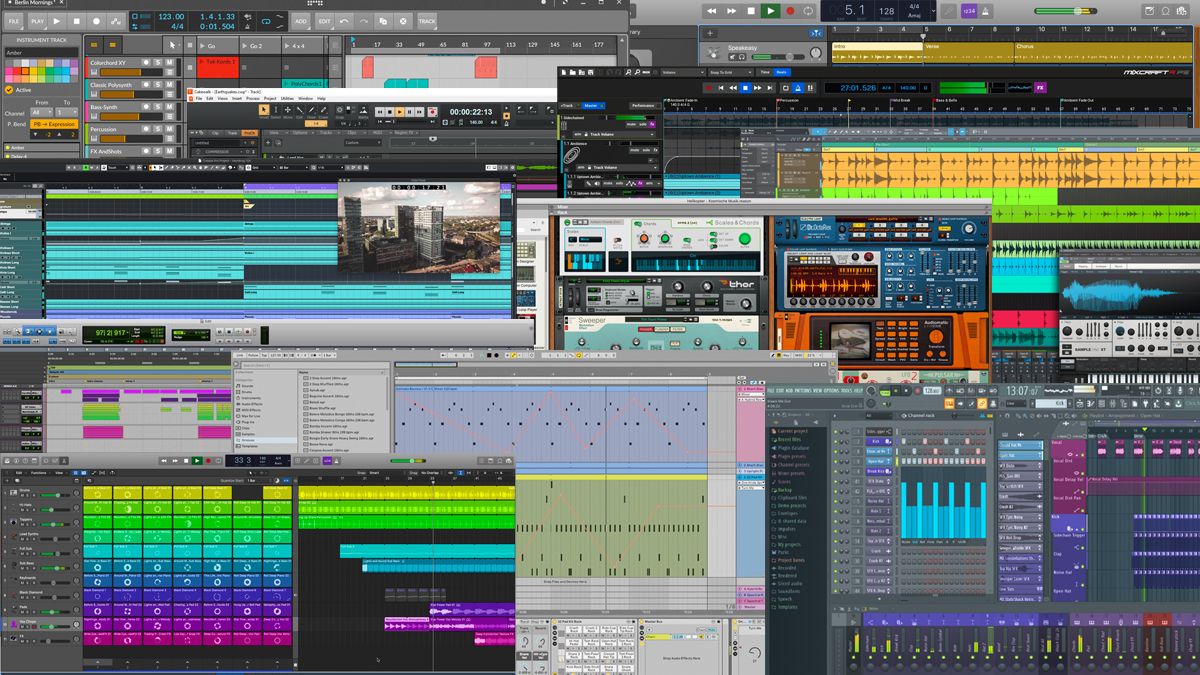

There are several ways apps already communicate or share information. For audio and plugins, they use protocols like VST/AU, which have predetermined guidelines set by their developers. The hierarchy works its way down the chain. Essentially, how they communicate comes down to protocols, APIs, etc. In other words: rules.
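To make the "rules" idea concrete, here is a minimal Python sketch. It is not the real VST or AU interface; `AudioPlugin`, `Gain`, `Broken`, and `host_can_load` are hypothetical names made up for illustration. The point is only that a host talks to a plugin through an agreed set of methods and can turn away anything that does not offer them.

```python
from typing import List, Protocol, runtime_checkable


@runtime_checkable
class AudioPlugin(Protocol):
    """Hypothetical contract; real plugin formats define far more than this."""

    def process(self, block: List[float]) -> List[float]: ...

    def set_parameter(self, name: str, value: float) -> None: ...


class Gain:
    """Follows the contract: scales every sample by a gain factor."""

    def __init__(self) -> None:
        self.gain = 1.0

    def set_parameter(self, name: str, value: float) -> None:
        if name == "gain":
            self.gain = value

    def process(self, block: List[float]) -> List[float]:
        return [sample * self.gain for sample in block]


class Broken:
    """Ignores part of the contract: there is no set_parameter method."""

    def process(self, block: List[float]) -> List[float]:
        return block


def host_can_load(candidate: object) -> bool:
    # The host only checks that the agreed methods exist.
    # It knows nothing else about the plugin's internals.
    return isinstance(candidate, AudioPlugin)


print(host_can_load(Gain()), host_can_load(Broken()))  # True False
```

The host never needs to know what `Gain` does internally. It only relies on the agreed methods being there, which is the same reason one plugin can run in many different DAWs.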

Example with a DAW plugin:

1. Windows provides its Core Audio APIs (Apple's equivalent on macOS and iOS is the Core Audio framework), which define how the operating system handles sound. This layer plays a role in how software passes its audio buffers to the audio driver. Developers have access to it.

2. A host DAW uses the VST protocol to load plugins and run audio through them, feeding each one audio, automation, presets, etc. The host in turn depends on the guidelines set by the OS audio framework (Core Audio) in order to output to the speakers.

3. VST is made by Steinberg and gives hosts a common method for running a processor in a signal chain. Its guidelines are respected by the majority of DAWs. A newer type of plugin protocol is AUv3 on iOS, which is still nowhere near as fleshed out as its predecessors.

4. The plugin developer needs to follow the guidelines laid out in the VST/AU protocols for the plugin to function properly. Otherwise, it might not recall presets, work with automation, etc.

5. The sound ultimately reaches the speakers through the host, which uses the OS core audio framework and the audio drivers.

Note: Any inter-application audio will use the functionality offered by Core Audio and other methods of communication.

Hope that answered your question. Enjoyed answering this to be used for a blog piece.
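For readers who prefer code, the five steps above can be sketched roughly as follows. This is a toy model under loud assumptions: `GainPlugin`, `Host`, `driver_write`, and the `speaker` list are hypothetical stand-ins, not real VST, AU, or Core Audio calls. It only shows the direction of the flow: the host drives automation and presets, pushes a block of samples through the plugin chain, and hands the result to the OS audio layer.

```python
from typing import Callable, Dict, List

BLOCK_SIZE = 4  # tiny block so the result is easy to inspect


class GainPlugin:
    """Step 4: a plugin exposing the hooks a host expects (hypothetical names)."""

    def __init__(self) -> None:
        self.params: Dict[str, float] = {"gain": 1.0}

    def set_parameter(self, name: str, value: float) -> None:
        self.params[name] = value  # called by the host for automation

    def get_state(self) -> Dict[str, float]:
        return dict(self.params)  # the host saves this as a preset

    def set_state(self, state: Dict[str, float]) -> None:
        self.params = dict(state)  # preset recall

    def process(self, block: List[float]) -> List[float]:
        return [sample * self.params["gain"] for sample in block]


class Host:
    """Steps 2 and 5: run audio through the chain, then pass it to the driver."""

    def __init__(
        self,
        chain: List[GainPlugin],
        driver_write: Callable[[List[float]], None],
    ) -> None:
        self.chain = chain
        self.driver_write = driver_write  # stand-in for the OS audio API

    def render(self, block: List[float]) -> None:
        for plugin in self.chain:
            block = plugin.process(block)
        self.driver_write(block)


speaker: List[float] = []            # stand-in for the audio device
plugin = GainPlugin()
host = Host([plugin], speaker.extend)

preset = plugin.get_state()          # host stores a preset (gain = 1.0)
plugin.set_parameter("gain", 0.5)    # an automation move
host.render([1.0] * BLOCK_SIZE)      # this block comes out at half level
plugin.set_state(preset)             # preset recall restores gain = 1.0
host.render([1.0] * BLOCK_SIZE)      # this block comes out at full level
```

If the plugin skipped `get_state` and `set_state`, the host would have nothing to save or restore, which is the preset-recall failure described in step 4.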